Chatbot from Scratch

For the course “Deep Learning (INF265)” at the University of Bergen (UiB) in spring 2025, I did a project to implement a transformer model from scratch and train it on a dataset of question-answer pairs.

The model is a decoder-only transformer that uses causal self-attention to generate answers to short questions. This is similar to the GPT-2 architecture, but much smaller and simpler.

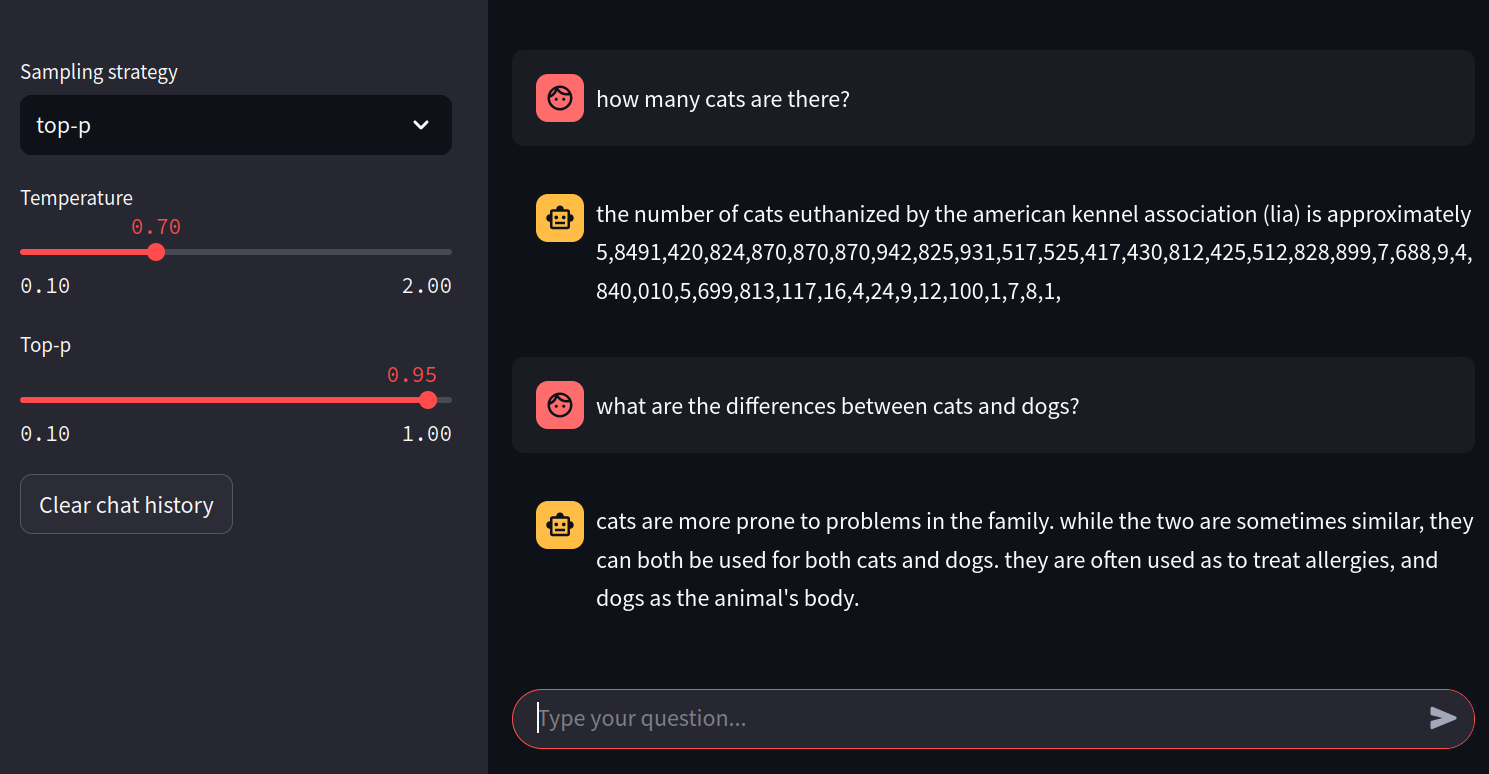
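To make the idea of causal self-attention concrete, here is a minimal, dependency-free sketch of a single attention head. It is not the code from the repository: the function names are made up for illustration, and the input vectors are used directly as queries, keys, and values, whereas a real transformer would first pass them through learned linear projections.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    queries, keys, values: lists of T vectors (lists of floats).
    Position t may only attend to positions 0..t.
    Returns (outputs, weights), where weights is a T x T matrix.
    """
    seq_len = len(queries)
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for t, q in enumerate(queries):
        # Causal mask: only score the keys at positions <= t.
        scores = [
            sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d_k)
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        # Weighted sum of the visible value vectors.
        out = [
            sum(w * values[s][j] for s, w in enumerate(weights))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        # Future positions get weight 0 so every row has length T.
        all_weights.append(weights + [0.0] * (seq_len - t - 1))
    return outputs, all_weights


# Toy input: three token vectors, reused as queries, keys, and values
# (an assumption for brevity; real models use learned projections).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
```

The first token can only see itself, so its attention weight on its own position is 1 and its output equals its own value vector. Later tokens mix information from everything that came before them, but never from the future.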

I think this is a good exercise for understanding the inner workings of transformer models and how they can be used for text generation. And for fun, I also created a simple chatbot interface using Streamlit to interact with the trained model.
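Text generation with a decoder-only model is autoregressive: the model predicts one token, appends it to the input, and repeats. The sketch below shows greedy decoding under some assumptions: `next_token_logits` is a hypothetical stand-in for the trained model, `toy_model` and the token ids are invented for the demo, and the actual project may well use a different sampling strategy.

```python
def generate(next_token_logits, prompt_ids, eos_id, max_new_tokens=20):
    """Greedy autoregressive decoding.

    next_token_logits: callable mapping a list of token ids to a list of
    scores, one per vocabulary entry (hypothetical stand-in for the model).
    Returns only the newly generated token ids.
    """
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        logits = next_token_logits(ids)
        # Greedy choice: pick the highest-scoring token.
        next_id = max(range(len(logits)), key=logits.__getitem__)
        ids.append(next_id)
        if next_id == eos_id:
            break  # the model signalled the end of the answer
    return ids[len(prompt_ids):]


# Toy stand-in model: always predicts (last token + 1) modulo the vocab size.
VOCAB_SIZE = 5


def toy_model(ids):
    target = (ids[-1] + 1) % VOCAB_SIZE
    return [1.0 if i == target else 0.0 for i in range(VOCAB_SIZE)]


answer_ids = generate(toy_model, prompt_ids=[0, 1], eos_id=4)
print(answer_ids)  # [2, 3, 4]
```

In a chatbot, the question would be tokenized into `prompt_ids`, and the returned ids would be decoded back into text before being shown to the user.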

The repository contains the full implementation and a notebook for training the model on Google Colab, which takes only a few hours.